
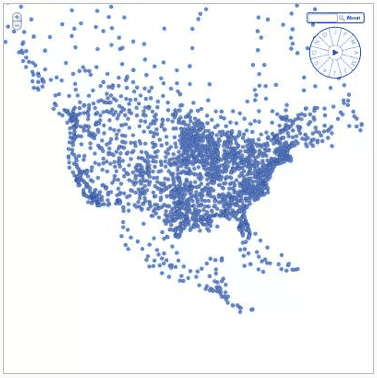

This is interesting: Google's preview for my Wind History project shows they executed quite a lot of JavaScript and SVG code.

Related, a few weeks back Matt Cutts tweeted that Googlebot is executing some JavaScript when indexing. (See this analysis and my earlier comments.) It's worth noting that the Googlebot indexer is almost certainly an entirely different system from the preview image generator. I suspect the index-building crawl still runs very little JavaScript code and almost certainly doesn't render pixels; speed is important for freshness. (A remaining puzzle: why aren't the image tiles from OpenStreetMap in that preview? They're also loaded via JavaScript. They may have loaded too slowly, or maybe the preview generator doesn't load content from external domains?)
